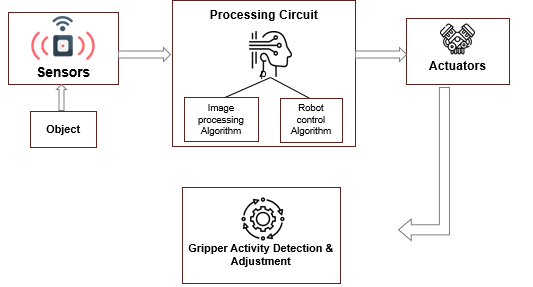

Industry 5.0 advancements have turned gripper systems into a vital manufacturing tool. They are now widely used to pick and position products of varying shapes, textures, weights, and fragility. Intelligent gripping systems combine and correlate data from multiple sensors to generate information that supports decision-making. These systems can handle parts autonomously and refine their underlying algorithms as required. An intelligent gripping system comprises an image processing algorithm that processes images collected from sensors to detect objects, a robot control algorithm that regulates the gripper's motion based on feedback, and an actuator that drives precise movements of the gripper fingers. Together, these parts increase the accuracy of the gripping system.

The integration of artificial intelligence and deep learning algorithms in gripper systems addresses many existing challenges:

- Accurate identification of objects.

- Precise estimation of grasping force for holding objects.

- Automatic adjustment of gripper characteristics based on detected objects.

- Faster identification of the gripping angle for the identified object.

- Faster grasp detection based on real-time data.

- Human detection in the robot’s workspace for safe human-robot interaction.

Soft Robotics, Schunk, OnRobot, Visevi Robotics, and Tyson are among the leading industry players manufacturing AI-based gripper systems for sectors such as food and beverage, healthcare, automotive, and aerospace.

Why is AI Being Integrated into a Gripper System?

Gripping systems were initially intended only for very simple jobs. They rely on traditional programming, which requires explicitly created rules and templates. In practice, many scenarios involve too many variables for this approach: the rules become complicated, which restricts where such gripping systems can be used. Equipping gripping systems with intelligence is the only way to lift this restriction.

Artificial intelligence (AI)-based systems can learn from a predefined training set and from the tasks they perform regularly. Based on this training and the current situation, they can assess their circumstances and take appropriate action, which also enables complete automation of the gripping system.

Components of an AI-Based Gripper System

An intelligent gripping system consists of advanced sensors, a processing unit, and an actuator. Advanced sensors, such as 3D cameras, LiDAR, ultrasonic sensors, tactile sensors, and air pressure sensors, provide the input to the system's AI-based algorithms.

- The processing unit processes data from the various sensors and analyzes it to provide actionable insights. Common algorithms used for data processing are:

  - Image processing algorithms: popular examples include morphological image processing, convolutional neural networks (CNNs), YOLO and ROLO (Recurrent YOLO), spatial pyramid pooling (SPP), the single shot detector (SSD), and region-based convolutional neural networks (R-CNN).

  - Robot control algorithms: popular examples include sampling-based motion planning, hand-eye calibration, grasp-assistance algorithms, and closed-loop slip control.

- Actuation systems may include electric motors, piezoelectric actuation, pneumatic and vacuum actuation, shape memory alloy actuation, electroactive polymers, and electroadhesive actuation.
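As an illustration of the morphological image processing mentioned above, the following is a minimal sketch, not taken from any specific gripper product: a binary opening (erosion followed by dilation with a 3×3 structuring element) removes isolated noise pixels from a thresholded object mask, and the centroid of the remaining pixels serves as a candidate grasp point. Plain Python lists are used so the example is self-contained; the function names are illustrative, and a production system would more likely rely on an image-processing library.

```python
from typing import Iterator, List, Tuple

Mask = List[List[int]]  # binary image: 1 = object pixel, 0 = background

# 3x3 square structuring element, expressed as (row, col) offsets.
OFFSETS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]


def _neighbours(mask: Mask, r: int, c: int) -> Iterator[int]:
    """Yield the 3x3 neighbourhood values; out-of-bounds pixels count as 0."""
    h, w = len(mask), len(mask[0])
    for dr, dc in OFFSETS:
        rr, cc = r + dr, c + dc
        yield mask[rr][cc] if 0 <= rr < h and 0 <= cc < w else 0


def erode(mask: Mask) -> Mask:
    """A pixel stays set only if its whole 3x3 neighbourhood is set."""
    return [[int(all(_neighbours(mask, r, c))) for c in range(len(mask[0]))]
            for r in range(len(mask))]


def dilate(mask: Mask) -> Mask:
    """A pixel becomes set if any pixel in its 3x3 neighbourhood is set."""
    return [[int(any(_neighbours(mask, r, c))) for c in range(len(mask[0]))]
            for r in range(len(mask))]


def opening(mask: Mask) -> Mask:
    """Erosion then dilation: removes specks smaller than the element."""
    return dilate(erode(mask))


def centroid(mask: Mask) -> Tuple[float, float]:
    """Mean (row, col) of the set pixels, e.g. as a candidate grasp point."""
    points = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not points:
        raise ValueError("mask is empty")
    return (sum(r for r, _ in points) / len(points),
            sum(c for _, c in points) / len(points))
```

For example, on a 7×7 mask containing a 3×3 object plus one stray pixel in a corner, `opening` removes the stray pixel and `centroid` of the result is `(3.0, 3.0)`, the object's centre.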

Challenges and Solutions

Applying AI-based algorithms to a gripping system can resolve several challenges, some of which are described below.

A smart gripping solution should be able to adjust its force to the type of object it targets. It is therefore important to calculate precisely the grasping force needed to hold objects of different shapes and sizes.
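One common first-order way to frame this calculation, offered here as an illustrative assumption rather than the method any particular system uses, is a friction-grip model: a gripper with $n$ frictional finger contacts ($n = 2$ for a parallel-jaw gripper) lifts an object of mass $m$ vertically with acceleration $a$. Friction at the contacts must carry the object's weight plus its inertial load, so each finger must press with a normal force of at least

$$
F_N \;\geq\; \frac{S\, m\,(g + a)}{n\,\mu}
$$

where $\mu$ is the coefficient of friction between fingers and object, $g \approx 9.81\ \text{m/s}^2$, and $S \geq 1$ is a safety factor. For example, with $m = 0.5$ kg, $a = 2\ \text{m/s}^2$, $n = 2$, $\mu = 0.4$, and $S = 1.5$, each finger must apply at least $1.5 \times 0.5 \times 11.81 / 0.8 \approx 11.1$ N. Because $\mu$ depends on the surface and $m$ on the object, the required force changes from object to object, which is why the object must be identified before the force is set.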

Solutions to the Problem

- GQ-CNN (Grasp Quality Convolutional Neural Network) model: it helps estimate the grasping force and detect the target object. The algorithm is applied to a grasping situation as follows:

  - The GQ-CNN model ranks candidate grasps by grasp robustness, the probability of grasp success predicted by mechanics models, which takes into account factors such as object position and surface friction.

  - The grasping apparatus estimates the force needed for the identified object according to a predefined threshold.

  - Once the grasping apparatus contacts the object, it uses force control to adapt the grip force to the identified object.
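GQ-CNN itself is a trained network that scores grasps from depth images; the sketch below does not reproduce it. It only illustrates the notion of grasp robustness described in the first step: the probability that a grasp succeeds under uncertainty in gripper pose and surface friction. Here that probability is estimated by Monte Carlo sampling of a simple planar two-finger friction-cone check, and the candidates are ranked by it. The grasp representation, noise levels, and all names are hypothetical.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class GraspCandidate:
    """Hypothetical planar two-finger grasp: the angle (radians) between the
    jaw closing axis and the surface normal at each of the two contacts."""
    name: str
    angle_1: float
    angle_2: float


def in_friction_cones(angle_1: float, angle_2: float, mu: float) -> bool:
    """Planar antipodal check: the closing axis must lie inside the friction
    cone (half-angle atan(mu)) at both contacts."""
    half_angle = math.atan(mu)
    return abs(angle_1) <= half_angle and abs(angle_2) <= half_angle


def estimate_robustness(grasp: GraspCandidate, mu_mean: float = 0.5,
                        mu_std: float = 0.1, pose_std: float = 0.05,
                        samples: int = 2000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(success) under friction and pose uncertainty.
    A fixed seed means every candidate is scored on the same random draws."""
    rng = random.Random(seed)
    successes = 0
    for _ in range(samples):
        mu = max(0.0, rng.gauss(mu_mean, mu_std))  # uncertain surface friction
        # Perturb each contact angle independently (a simplifying assumption).
        a1 = grasp.angle_1 + rng.gauss(0.0, pose_std)
        a2 = grasp.angle_2 + rng.gauss(0.0, pose_std)
        if in_friction_cones(a1, a2, mu):
            successes += 1
    return successes / samples


def rank_grasps(candidates: List[GraspCandidate]) -> List[Tuple[GraspCandidate, float]]:
    """Return (grasp, robustness) pairs sorted from most to least robust."""
    scored = [(g, estimate_robustness(g)) for g in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    candidates = [
        GraspCandidate("oblique", 0.40, 0.35),
        GraspCandidate("centered", 0.0, 0.05),
        GraspCandidate("edge", 0.7, 0.1),
    ]
    for grasp, score in rank_grasps(candidates):
        print(f"{grasp.name}: {score:.3f}")
```

With these settings, the nearly head-on "centered" grasp ranks first and the steep "edge" grasp last. A learned model such as GQ-CNN replaces the hand-written mechanics check with a network that predicts this probability directly from sensor data.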

Ensuring the safety of human-machine interaction is another challenge of utmost importance.

- A deep learning network integrated with the gripper can help detect hazardous situations and keep humans safe. The working of one such system is summarized below:

  - RGB-D cameras capture the environment so that humans can be detected. A human action recognition (HAR) network and a collision detection network (CDN) then handle human recognition and collision detection.

  - A 3D-CNN serves as the HAR network that detects the presence of a human, and a 1D-CNN serves as the CDN.

  - The central decision-maker (CDM) combines the results from both networks to determine the level of safety for humans.

  - The system generates feedback based on the CDM results and sends it to the gripper to prevent collisions, ensuring human safety.
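The fusion step performed by the central decision-maker can be sketched as a small rule-based function. This is an assumption for illustration, not the logic of the system summarized above: the HAR network is taken to output a probability that a human is present, the CDN a probability that a collision is imminent, and the CDM maps both to a safety level and then to a gripper command. The thresholds, speed factors, and names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class SafetyLevel(Enum):
    SAFE = "safe"
    CAUTION = "caution"
    DANGER = "danger"


@dataclass(frozen=True)
class GripperCommand:
    speed_scale: float  # fraction of nominal motion speed (0.0 = stopped)
    halt: bool          # True means stop motion immediately


def central_decision_maker(human_prob: float, collision_prob: float,
                           human_threshold: float = 0.5,
                           collision_threshold: float = 0.3) -> SafetyLevel:
    """Fuse the HAR and CDN outputs into one safety level (hypothetical rule)."""
    human_present = human_prob >= human_threshold             # HAR network output
    collision_likely = collision_prob >= collision_threshold  # CDN output
    if human_present and collision_likely:
        return SafetyLevel.DANGER
    if human_present or collision_likely:
        return SafetyLevel.CAUTION
    return SafetyLevel.SAFE


def feedback_for(level: SafetyLevel) -> GripperCommand:
    """Translate the safety level into the feedback sent to the gripper."""
    if level is SafetyLevel.DANGER:
        return GripperCommand(speed_scale=0.0, halt=True)
    if level is SafetyLevel.CAUTION:
        return GripperCommand(speed_scale=0.25, halt=False)
    return GripperCommand(speed_scale=1.0, halt=False)
```

For example, `feedback_for(central_decision_maker(0.9, 0.8))` returns a halt command, while a detected human with a low collision probability only slows the gripper. Keeping the fusion rule separate from the two networks makes thresholds easy to tune without retraining.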

Advanced Features

Tactile Sensors in the Gripping System

- A tactile sensor is a touch sensor that provides information about the characteristics of an object in contact with it.

- When mounted on a robot’s parallel-jaw gripper, the sensor can detect slippage so that the gripping force can be adjusted to keep objects from dropping.

- The sensor helps detect edges, allowing the gripper to secure objects of varying geometric shapes.
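Slip detection with force adjustment can be sketched as a simple closed loop. The sketch assumes a tactile sensor that reports the normal and tangential (shear) force at the contact and a known estimate of the friction coefficient; it treats the ratio of tangential load to available friction as an incipient-slip indicator and raises the grip force step by step until that ratio falls below a threshold. The threshold, step size, force limit, and function names are hypothetical.

```python
import math


def slip_ratio(normal_force: float, tangential_force: float, mu: float) -> float:
    """Tangential load divided by the maximum friction the contact can supply.
    Values approaching 1.0 indicate incipient slip."""
    if normal_force <= 0:
        return math.inf
    return tangential_force / (mu * normal_force)


def grip_force_step(normal_force: float, tangential_force: float, mu: float,
                    threshold: float = 0.8, step: float = 0.5,
                    max_force: float = 40.0) -> float:
    """One iteration of the controller: tighten if slip is imminent,
    never exceeding the gripper's force limit."""
    if slip_ratio(normal_force, tangential_force, mu) > threshold:
        return min(normal_force + step, max_force)
    return normal_force


def settle_grip(normal_force: float, tangential_force: float, mu: float,
                max_iters: int = 200) -> float:
    """Repeat the control step until the commanded force stops changing."""
    for _ in range(max_iters):
        new_force = grip_force_step(normal_force, tangential_force, mu)
        if new_force == normal_force:
            break
        normal_force = new_force
    return normal_force
```

For example, with a 5 N tangential load and $\mu = 0.5$, starting from 8 N the loop tightens to 12.5 N, where the slip ratio drops to the 0.8 threshold. If the load cannot be held within the 40 N limit, the force saturates at that limit, which a real system would need to report as a grasp failure.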

Advanced Vision Sensors

Vision sensors enable automatic depth compensation in the gripping system by detecting the geometry of the object on the work surface. The circular movement of the gripping fingers can then be modified automatically based on the detected geometry.
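A minimal sketch of how a depth image can drive both adjustments follows, under stated assumptions: a downward-facing depth camera, a known camera-to-table distance, and a parallel-jaw gripper. Pixels noticeably closer than the table are treated as the object; the object's height sets how deep the fingers descend (depth compensation), and the object's principal axis, computed from second-order image moments, sets the jaw rotation. The function name, the 5 mm threshold, and the choice to grip at mid-height are hypothetical.

```python
import math
from typing import List, Optional, Tuple

DepthImage = List[List[float]]  # camera-to-surface distance (m) per pixel


def analyze_object(depth: DepthImage, table_depth: float,
                   min_height: float = 0.005) -> Optional[Tuple[float, float, float]]:
    """Return (grasp_depth, object_height, jaw_angle), or None if no object.

    jaw_angle is measured in image coordinates (x = column, y = row, pointing
    down) and is perpendicular to the object's major axis, so the jaws close
    across the object's narrower dimension.
    """
    pixels = [(r, c, d) for r, row in enumerate(depth) for c, d in enumerate(row)
              if d < table_depth - min_height]
    if not pixels:
        return None
    top = min(d for _, _, d in pixels)  # closest point = top of the object
    height = table_depth - top
    grasp_depth = top + height / 2      # descend to the object's mid-height

    # Central second-order moments of the object's footprint.
    n = len(pixels)
    cx = sum(c for _, c, _ in pixels) / n
    cy = sum(r for r, _, _ in pixels) / n
    mu20 = sum((c - cx) ** 2 for _, c, _ in pixels) / n
    mu02 = sum((r - cy) ** 2 for r, _, _ in pixels) / n
    mu11 = sum((c - cx) * (r - cy) for r, c, _ in pixels) / n
    major_axis = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    jaw_angle = major_axis + math.pi / 2
    return grasp_depth, height, jaw_angle
```

For a 5 cm tall bar lying horizontally in the image on a table 0.5 m from the camera, this returns a height of about 0.05 m, a grasp depth of about 0.475 m, and a jaw angle of π/2, meaning the jaws rotate to close across the bar's width.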

Some of the prominent vision sensor technologies used in the industry are:

- 3D-sensing cameras

- Time-of-flight sensors (such as ultrasonic, radar, and LiDAR)

- Radiometry sensors (such as IR, UV, and X-ray sensors)

- Depth cameras

- RGB-D cameras

- Stereo vision systems for object recognition and depth information

Key Products

Market players are continuously improving technology by introducing more advanced features that increase the efficiency of their products. Some prominent players in this field are Schunk, Tyson, Tacniq, Tegram, and OnRobot.

Below are some key products and their features:

- Schunk, a German company, has developed the Co-Act JL1 gripper, with features such as:

  - A camera mounted between the fingers to detect and search for objects

  - Tactile sensors to detect and distinguish between workpieces and humans

  - Optical feedback on the current gripper status and workpiece identification

  - Capacitive sensors for collision prevention

  - Safe force limitation to apply the right gripping force for every kind of handling task

- Tyson, a US-based food and beverage company, has developed soft gripper technology for food picking and packing. Some of its prominent features are:

  - 3D vision and artificial intelligence (AI) enable hand-eye coordination in industrial robots, allowing automation of bulk-picking tasks.

  - The technology also helps pick and sort delicate or irregularly shaped food items.

Conclusion

Grippers and end-effectors have long been used as highly adaptable components in MEMS grasping, production lines, prosthetic arms, the automotive sector, surgical applications, and vegetable picking. Integrating artificial intelligence, deep learning, advanced vision sensors, and other features makes intelligent gripping systems more efficient by reducing their response time and increasing their accuracy. In the coming years, incorporating AI-based approaches into gripping systems is expected to address further challenges. Algorithms such as Faster R-CNN, granulated R-CNN (G-RCNN), and multi-class Deep SORT (MCD-SORT) may soon be used for object detection and tracking.