Evolution of 3D Sensing Technology Helping Machines

3D vision technology enables machines to understand and interact with real-world environments by digitizing the features and objects around them in minute detail. The process by which it senses 3D objects is called 3D sensing.

3D sensing technology offers high accuracy and supports long-range applications, which makes it a leading choice for large market segments. It includes techniques such as stereo vision, structured-light depth sensing, laser triangulation, pulsed time-of-flight (ToF), phase-shift or amplitude-modulated ToF, and the most recent technique, coherent 3D sensing.

Coherent 3D sensing technology uses the FMCW LiDAR technique. It is the newest of these technologies and addresses many of the limitations of earlier methods, such as poor performance in difficult lighting, multi-system interference, eye-safety limits, and slow measurement time. Because it also measures velocity, it extends vision systems into a fourth dimension.

Some market players that use the FMCW LiDAR technique in their 3D vision systems are Mobileye Global Inc., SiLC Technologies, Hokuyo Automation, Voyant Photonics, Aeva, Lumentum, and many more.

Evolution of 3D Sensing Technology

The development of 3D cameras and 3D sensing technology has opened up a wide range of new applications. These devices act as the “eyes” of machines, providing a 3D spatial representation that allows automated decision-making based on an object’s position, size, and orientation.

3D sensing captures real-world objects’ length, width, and height with enhanced clarity from a distance. The existing 3D sensing techniques suffer significant trade-offs in eye safety, bandwidth, range, crosstalk immunity, and accuracy. Eye safety is a big concern and limits the amount of power and the operating range.

3D Sensing Technology: Techniques

A few of the techniques are briefly described below:

- 3D Stereo Vision: It uses a pair of cameras to record the view of the target object from two different angles.

  - The depth range, accuracy, and resolution depend on the distance between the two cameras.

  - It is suitable for measuring large distances in an outdoor environment.

- Laser Triangulation: It uses a laser and a camera to define a spatial triangle from which the 3D coordinates are obtained.

  - A camera arranged at a fixed angle to the laser emitter records an image of the laser line as the object’s geometric shape deflects it, thereby capturing an accurate profile of the object at a point in time.

  - Distance follows from the known geometry between the emitter and the camera, which observe the scene from two different positions.

- Structured Light: It uses a camera and laser light to provide an entire 3D image of the object, and is also known as the full-field method.

  - It projects a known pattern onto a scene and then captures it with a camera system.

  - When the pattern hits a 3D object, it deforms, and vision algorithms reconstruct the object’s shape from that deformation.

- ToF (Time-of-Flight): It emits powerful pulses of light and detects obstacles in its path by measuring the round-trip time of photons that bounce back from objects. It uses two different techniques:

  - Pulsed Laser Time-of-Flight (Pulsed ToF) measures the time delay between the emitted laser pulse and the pulse reflected back from the object.

  - Amplitude Modulated Continuous Wave Time-of-Flight (AMCW-ToF) measures the phase shift between an amplitude-modulated light signal emitted by an active light source and its received reflection, using a reference signal, and provides per-pixel distance information.

- Frequency Modulated Continuous Wave LiDAR (FMCW LiDAR): It provides a powerful coherent vision system that relies on low-power frequency chirps from a high-coherence laser.

  - FMCW LiDAR captures tiny frequency shifts induced by object movements. It also provides information about the sensed materials and surfaces via polarization changes.

  - The FMCW LiDAR technique features a large optical bandwidth and high sensitivity, which enable 3D vision systems to capture more and finer details of an object.
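The distance relations behind the first few techniques can be sketched in a few lines. This is a minimal illustration using the standard textbook formulas, not any vendor's implementation. The function and parameter names are hypothetical, and the stereo formula assumes two parallel, rectified cameras.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth from stereo disparity: Z = f * B / d (rectified cameras assumed)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def pulsed_tof_distance(round_trip_s):
    """Pulsed ToF: light covers the distance twice, so d = c * t / 2."""
    return C * round_trip_s / 2.0


def amcw_tof_distance(phase_shift_rad, modulation_hz):
    """AMCW ToF: d = c * phi / (4 * pi * f_mod), valid within one phase wrap."""
    return C * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def amcw_unambiguous_range(modulation_hz):
    """Largest distance AMCW ToF can report before the phase wraps at 2 * pi."""
    return C / (2.0 * modulation_hz)


if __name__ == "__main__":
    # Illustrative numbers only; they are not taken from any real sensor.
    print(stereo_depth(focal_length_px=700, baseline_m=0.12, disparity_px=14))
    print(pulsed_tof_distance(100e-9))          # a 100 ns round trip, about 15 m
    print(amcw_tof_distance(math.pi, 20e6))     # half a cycle at 20 MHz
    print(amcw_unambiguous_range(20e6))
```

The stereo relation shows why the camera spacing matters: for a fixed disparity error, a wider baseline gives a smaller depth error. The AMCW relation shows the trade-off between modulation frequency and unambiguous range.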

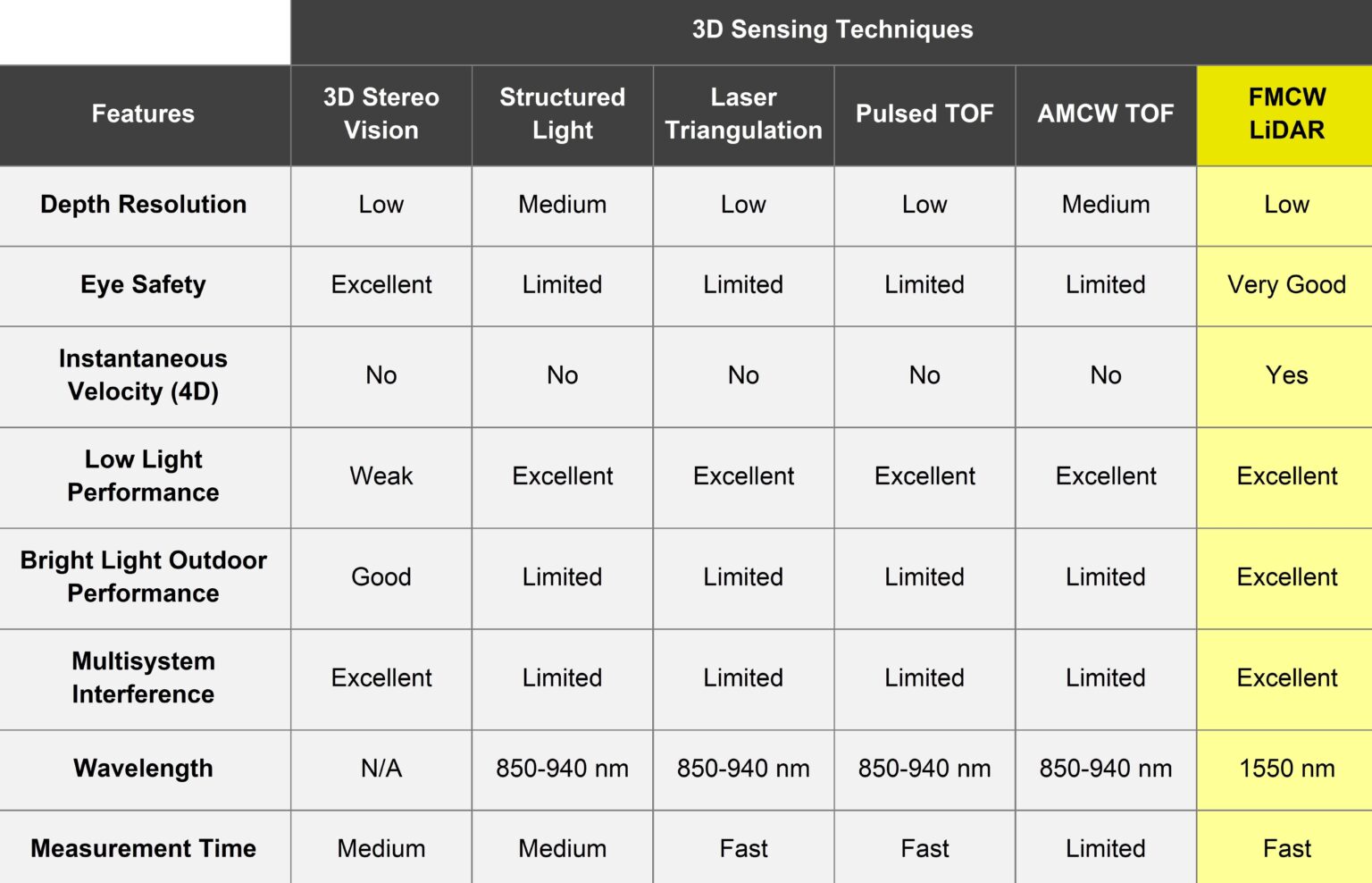

The table below compares different 3D sensing techniques based on various parameters:

The above table shows that the FMCW LiDAR technique compares favorably with the other 3D sensing techniques. Coherent detection gives it higher detection sensitivity and accuracy, strong performance in both low light and bright outdoor light, and immunity to multisystem interference.

FMCW LiDAR Technique for 3D Vision

FMCW sensing is becoming a viable alternative for 3D vision systems of the future due to the advancements in low-noise optical signal processing circuits, long-coherence-length lasers, low-cost operations, etc.

FMCW sensing promises improvements along several dimensions: higher-precision scanning at longer distances, eye safety, and immunity to multisystem crosstalk and outdoor lighting conditions. It also offers native 4D vision by providing velocity information with every measurement.

It measures the distance and velocity of moving objects by continuously varying the frequency of the transmitted signal with a modulating signal, at a known rate over a fixed time.

FMCW LiDAR offers advantages such as high sensitivity, long detection distance, resistance to background interference, and higher-precision scanning at longer range. The most significant advantage of FMCW is that all components can be integrated on a single photonics chip, which is only possible with FMCW in the short-wave infrared (SWIR) band.

How Does It Work?

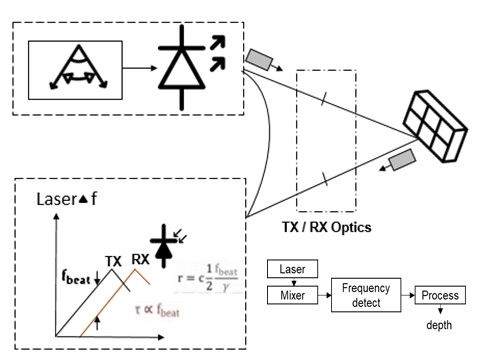

The coherent 3D vision FMCW technique relies on coherent photons that travel hundreds of meters, interact with the target and pick up its features, and then return while still coherent. The returning light is then mixed with a portion of the outgoing light for near-lossless amplification. The process involves the following steps:

- First, a laser source emits a low-power, frequency-modulated chirp. The object reflects a returning chirp whose frequency is offset by the range delay and by a Doppler shift due to velocity.

- The frequency shift between the outgoing and returning chirps depends on the distance and velocity of the object. Dual chirps (an up-chirp and a down-chirp) make it possible to separate range from velocity.

- The reflected chirp encodes the distance to the measurement point as a frequency shift. If the measurement point also has a radial velocity, the reflected chirp carries an additional Doppler frequency shift. Having the radial velocity for every pixel effectively provides a 4D image.

- The photonic circuitry of FMCW LiDAR mixes a portion of the outgoing coherent laser light with the received light. This mixing provides coherent amplification and yields the frequency difference from which depth is derived.
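The way dual chirps separate range from velocity can be sketched with the usual triangular-chirp relations. This is a simplified model under stated assumptions, not a description of any specific product: the chirp is perfectly linear, the range-induced beat frequency is larger than the Doppler shift, and a positive velocity means the target is approaching. All function and parameter names are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def beat_frequencies(distance_m, velocity_mps, bandwidth_hz, chirp_time_s, wavelength_m):
    """Forward model: beat frequencies seen during the up-chirp and the down-chirp."""
    slope = bandwidth_hz / chirp_time_s        # chirp rate in Hz per second
    f_range = 2.0 * distance_m * slope / C     # beat caused by the round-trip delay
    f_doppler = 2.0 * velocity_mps / wavelength_m  # Doppler shift of the return
    # An approaching target lowers the up-chirp beat and raises the down-chirp beat.
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up_hz, f_down_hz, bandwidth_hz, chirp_time_s, wavelength_m):
    """Inverse model: recover distance and radial velocity from the two beats."""
    slope = bandwidth_hz / chirp_time_s
    f_range = (f_up_hz + f_down_hz) / 2.0      # the Doppler term cancels in the sum
    f_doppler = (f_down_hz - f_up_hz) / 2.0    # the range term cancels in the difference
    distance_m = C * f_range / (2.0 * slope)
    velocity_mps = wavelength_m * f_doppler / 2.0
    return distance_m, velocity_mps


if __name__ == "__main__":
    # Illustrative parameters: 1 GHz chirp over 10 microseconds at 1550 nm.
    params = dict(bandwidth_hz=1e9, chirp_time_s=10e-6, wavelength_m=1550e-9)
    f_up, f_down = beat_frequencies(100.0, 10.0, **params)
    print(range_and_velocity(f_up, f_down, **params))
```

Averaging the two beat frequencies isolates the range term, and halving their difference isolates the Doppler term, which is why a single up-chirp alone cannot distinguish a far, static target from a nearer, moving one.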

Open Research Challenges Related To FMCW LiDAR Technology

Implementing the FMCW technique for 3D sensing applications raises several challenges: keeping the cost of long-range devices low, working with lasers that have long coherence lengths (narrow linewidths), building very accurate, low-noise optical signal processing circuits, and maintaining stability and linearity in the laser source’s wavelength for fast, accurate data collection. Some of the significant challenges faced by solution developers are listed below:

- Covering Long Range Quickly: FMCW LiDAR requires precise control of narrow-linewidth, frequency-agile lasers, which is technically complex. This has limited successful parallelization and reduced the speed of long-range 3D sensing coverage.

- Low 3D Frame Rate in Recognizing Dynamic Scenes: FMCW LiDAR systems suffer from a low 3D frame rate, which restricts their use in real-time imaging of dynamic scenes.

- Low Field of View (FoV): The FMCW LiDAR system uses a solid-state scanner to receive the reflected light pulses from the objects. The use of a solid-state scanner restricts the system’s field of view.

- Dynamic Distance Measurement: Detecting extremely fast-moving objects with FMCW LiDAR requires high sampling rates and precise dispersion matching, which are difficult to achieve with existing range extraction techniques.

- Spectrum Aliasing and Leakage: During spectrum measurement in FMCW LiDAR systems, the hardware and surroundings generate a lot of noise, which affects the received echo signal and causes spectrum aliasing and leakage.
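Spectral leakage, the last item above, can be shown with a small pure-Python experiment. A beat tone that does not fall exactly on a frequency bin spreads energy into distant bins. Applying a window such as the Hann window is a common, generic signal-processing mitigation; it is shown here only as an illustration and is not a method attributed to any of the organizations in this article. The tone frequency, sample count, and bin choices are arbitrary assumptions.

```python
import cmath
import math


def dft_magnitude(samples, k):
    """Magnitude of bin k of the discrete Fourier transform (direct sum)."""
    n = len(samples)
    return abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                   for i, x in enumerate(samples)))


def hann_window(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]


def leakage_ratio(samples, peak_bin, far_bin):
    """Energy that leaked into a distant bin, relative to the peak bin."""
    return dft_magnitude(samples, far_bin) / dft_magnitude(samples, peak_bin)


N = 64
TONE_BINS = 10.5  # deliberately halfway between two bins (worst case)
tone = [math.cos(2.0 * math.pi * TONE_BINS * i / N) for i in range(N)]
windowed = [w * x for w, x in zip(hann_window(N), tone)]

rect_leak = leakage_ratio(tone, peak_bin=10, far_bin=25)
hann_leak = leakage_ratio(windowed, peak_bin=10, far_bin=25)

if __name__ == "__main__":
    print(f"no window:   {rect_leak:.2e}")
    print(f"Hann window: {hann_leak:.2e}")
```

The windowed signal leaks far less into the distant bin, at the cost of a wider main lobe. A real FMCW receiver has to balance the two when nearby returns must be resolved.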

3D Sensing Technology: Research Organizations

A few research organizations are working to overcome the open research challenges related to FMCW LiDAR systems. They want to use its advantageous features for 3D vision applications such as autonomous vehicles, robotics, augmented reality, virtual reality, and gaming. A few of the solutions related to the open research challenges are summarized below:

Swiss Federal Institute of Technology Lausanne: Researchers at the lab of Tobias Kippenberg at EPFL implement a parallel FMCW LiDAR engine by using integrated nonlinear photonic circuitry to increase speed for covering an extended range of the FMCW LiDAR system.

- This approach focuses on planar nonlinear waveguide platforms with high-quality silicon-nitride micro-resonators that have low losses.

- Coupling a single FMCW laser into a silicon-nitride planar micro-resonator turns the continuous-wave laser light into a stable optical pulse train. This also helps cover long ranges quickly.

Duke University: A researcher at Duke University uses non-mechanical beam scanners with no moving parts for high-speed imaging. This raises the frame rate of FMCW LiDAR so that a 3D vision system can detect objects in dynamic scenes.

- The approach is a high-speed FMCW-based 3D imaging system that combines a grating for beam steering with a compressed time-frequency analysis approach for depth retrieval.

- Optical phased arrays (OPAs) achieve beam steering by controlling the relative phase of arrays of coherent optical emitters. They also allow compact solid-state LiDAR with MHz beam scanning bandwidths.
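How a phase difference between emitters steers a beam can be illustrated with the standard relation for a uniform linear array, sin(θ) = λ·Δφ / (2π·d). This is a textbook idealization under assumed conditions (identical, equally spaced emitters and a single main lobe) and not the Duke system's actual design. The function name and the example values are hypothetical.

```python
import math


def opa_steering_angle_deg(phase_step_rad, emitter_pitch_m, wavelength_m):
    """Main-lobe angle of a uniform linear phased array.

    phase_step_rad is the phase difference applied between adjacent emitters.
    """
    s = wavelength_m * phase_step_rad / (2.0 * math.pi * emitter_pitch_m)
    if abs(s) > 1.0:
        raise ValueError("no real steering angle for this phase step and pitch")
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    # Illustrative values: 1550 nm light, emitters spaced half a wavelength apart.
    wavelength = 1.55e-6
    pitch = wavelength / 2.0
    for step in (0.0, math.pi / 4, math.pi / 2):
        print(step, opa_steering_angle_deg(step, pitch, wavelength))
```

Because the angle depends only on an electronically set phase step, the beam can be redirected without moving parts, which is what makes very high scanning bandwidths plausible.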

3D Sensing Technology: Recent innovations with FMCW LiDAR

Coherent 3D vision using FMCW techniques provides a solution for manufacturers building the next generation of autonomous vehicles, security solutions, and industrial robots. It can also be useful for drone navigation and mapping applications.

Below are some innovations from various market players:

Aeva develops FMCW 4D LiDAR that enables the fully automated manufacturing process to meet the sensing and perception needs of various autonomous applications at a mass scale.

- Its advanced 4D perception software detects instantaneous velocity and position with automotive-grade reliability. It does so in a compact form factor, with ultra-high resolution of up to 1000 lines per frame, to enable the next wave of autonomy.

Lumentum developed the FMCW LiDAR optical sub-assembly to solve the challenges associated with long-range LiDAR.

- It integrates all optical elements, including the laser, laser monitor, detector, mixer, receiver, scanning mirror, and circulator. Also, it helps reduce the cost, size, power consumption, and complexity of FMCW LiDAR

SiLC Technologies has introduced a chip-integrated FMCW LiDAR sensor to deliver coherent 3D vision.

- It enables robotic vehicles and machines to have the necessary data to perceive and classify their environment. Moreover, it helps them predict future dynamics using low-latency, low-compute-power, and rule-based algorithms.

Conclusion

A 3D vision sensing system that uses the FMCW technique raises performance to a higher level. Thanks to technological advancements, 3D vision is becoming a critical technology in many application areas, including industrial manufacturing automation, autonomous vehicles, and medicine, where it enhances productivity, efficiency, and quality.

In the future, more advanced techniques will be established for 3D vision systems. They will help overcome the challenges that FMCW techniques face, such as limited imaging speed, high cost, and the need for greater stability and linearity. They will give machines true vision through a scalable, lightweight, affordable solution, enabling the measurement of any object’s sweeping motion with higher accuracy and without prior training.
