Role of AI in Enhancing Hearing Devices

Artificial Intelligence (AI) is rapidly being integrated into hearing device design and manufacturing, increasing the devices’ utility and value. It delivers more personalized audio and adds health monitoring capabilities. Hearing aid technology has progressed from simple amplification to sophisticated digital sound processing. Earlier hearing aids picked up sound and amplified it; AI now adds computation that adapts to environmental changes, distinguishes voices, and tailors output to individual hearing preferences.

Noise Reduction and Sound Clarity

AI algorithms enhance noise cancellation by building on established approaches such as adaptive filters and real-time audio signal processing. These algorithms continuously monitor the incoming sound and adjust the cancellation to remove background noise more accurately. Machine learning models can anticipate and address unpredictable, complex noise patterns, which lets them reduce noise more effectively than earlier techniques. Because the audio is processed in real time, sound clarity and quality can adapt as conditions change.
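
As a rough illustration of the adaptive filters mentioned above, the sketch below implements a normalized least-mean-squares (NLMS) noise canceller in Python with NumPy. It is not taken from any particular hearing aid. It assumes a second "reference" input that picks up mostly noise, and the function name, tap count, and step size are hypothetical choices made for the example.

```python
import numpy as np

def nlms_noise_canceller(primary, reference, num_taps=16, step_size=0.1, eps=1e-8):
    """Adaptive noise cancellation with a normalized LMS (NLMS) filter.

    primary   : samples containing the wanted signal plus noise
    reference : samples correlated with the noise only (assumed available)
    Returns the error signal, which serves as the cleaned output.
    """
    primary = np.asarray(primary, dtype=float)
    reference = np.asarray(reference, dtype=float)
    weights = np.zeros(num_taps)
    cleaned = np.zeros(len(primary))
    # Zero-pad the reference so the first samples also have a full tap window.
    padded = np.concatenate([np.zeros(num_taps - 1), reference])
    for n in range(len(primary)):
        window = padded[n:n + num_taps][::-1]   # newest reference sample first
        noise_estimate = weights @ window       # filter's current guess of the noise
        error = primary[n] - noise_estimate     # what remains after subtracting it
        # Normalized update: the step is scaled by the energy in the window.
        weights += step_size * error * window / (window @ window + eps)
        cleaned[n] = error
    return cleaned

# Synthetic demonstration: a tone stands in for speech, white noise for the interference.
rng = np.random.default_rng(0)
t = np.arange(8000) / 8000.0
tone = 0.5 * np.sin(2 * np.pi * 220 * t)
noise_source = rng.normal(size=t.size)
# Hypothetical short acoustic path from the noise source to the main microphone.
noise_at_mic = np.convolve(noise_source, [0.6, 0.3, 0.1])[:t.size]
noisy = tone + noise_at_mic
cleaned = nlms_noise_canceller(noisy, noise_source)
```

The filter weights keep changing for as long as the signal runs, which is what lets this kind of filter follow noise that varies over time.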

AI algorithms help suppress unwanted signals, such as background noise, interference, echoes, and distortions, while amplifying the desired signals, including speech and music. These models have improved as they are trained on larger amounts of data, producing more precise sound across varied listening environments. They also allow audio to be delivered in line with the listener’s preferences and current hearing status. Deep learning, in particular, improves noise removal, spatial rendering, and dynamic range, further improving the listening experience.

Adaptive Sound Environments

By utilizing real-time data to adjust sound settings according to the user’s surroundings, artificial intelligence enhances audio experiences. It dynamically adjusts equalization, noise cancellation, and volume levels by continuously analyzing variables such as speech patterns, acoustics, and background noise. AI systems learn from user preferences and environmental changes to deliver personalized sound profiles that enhance comfort and clarity. AI constantly observes and adapts to its surroundings to ensure audio equipment emits crisp, high-quality sound while minimizing distractions, thereby improving the overall listening experience.
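
The adjustment loop described above can be sketched in a few lines of Python. This example is deliberately simple: it tracks a smoothed sound level and picks a program by threshold, standing in for the learned classifiers a real device would use. The program names, thresholds, and smoothing factor are all hypothetical.

```python
import numpy as np

def frame_levels_db(signal, frame_len=160):
    """RMS level of each non-overlapping frame, in dB relative to full scale (1.0)."""
    signal = np.asarray(signal, dtype=float)
    n_frames = len(signal) // frame_len
    frames = signal[:n_frames * frame_len].reshape(n_frames, frame_len)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    return 20.0 * np.log10(np.maximum(rms, 1e-10))  # floor avoids log of zero

def choose_programs(levels_db, quiet_below=-40.0, loud_above=-15.0, smoothing=0.9):
    """Pick a (hypothetical) program per frame from an exponentially smoothed level."""
    programs = []
    smoothed = levels_db[0]
    for level in levels_db:
        # Smoothing keeps the program from flipping on every short burst of sound.
        smoothed = smoothing * smoothed + (1.0 - smoothing) * level
        if smoothed < quiet_below:
            programs.append("quiet")
        elif smoothed > loud_above:
            programs.append("noisy")
        else:
            programs.append("moderate")
    return programs

# A quiet stretch followed by a loud one, using constant-amplitude placeholders.
signal = np.concatenate([np.full(1600, 0.001), np.full(16000, 0.5)])
programs = choose_programs(frame_levels_db(signal))
```

Because of the smoothing, the selected program moves from "quiet" through "moderate" to "noisy" over several frames instead of jumping at the first loud frame.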

Personalized Hearing Experiences

With AI-driven customization, hearing aids can be tailored to a user’s unique preferences and hearing profile by assessing their pattern of hearing loss. The algorithms process and store data on noise reduction, sound frequencies, volume levels, real-time acoustic settings, and hearing test results. These settings are then dynamically optimized in response to user feedback and environmental changes. The result is greater clarity and comfort, easier adaptation to different sound environments, and better overall performance and satisfaction from the hearing aid.
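
To make the idea of fitting to a hearing loss pattern concrete, here is a minimal Python sketch based on the half-gain rule, a classic rule of thumb that sets the gain at each frequency to roughly half the measured hearing loss. It is shown only as an illustration and is not the fitting method of any product. The function names, the gain cap, and the feedback step limit are assumptions made for the example.

```python
def half_gain_prescription(audiogram_db_hl, max_gain_db=50.0):
    """Map hearing thresholds (dB HL) per frequency (Hz) to a gain in dB.

    Uses the half-gain rule of thumb; max_gain_db is a hypothetical safety cap.
    """
    return {
        freq: min(max(threshold, 0.0) * 0.5, max_gain_db)
        for freq, threshold in audiogram_db_hl.items()
    }

def apply_user_feedback(gains_db, requested_changes_db, max_step_db=3.0):
    """Nudge gains toward what the user asked for, limiting each change.

    Limiting the step keeps a single piece of feedback from causing a large jump.
    """
    updated = dict(gains_db)
    for freq, change in requested_changes_db.items():
        step = max(-max_step_db, min(max_step_db, change))
        updated[freq] = max(0.0, updated.get(freq, 0.0) + step)
    return updated

# Hypothetical audiogram: thresholds worsen toward high frequencies.
audiogram = {500: 20.0, 1000: 30.0, 2000: 50.0, 4000: 70.0}
gains = half_gain_prescription(audiogram)
# The user asks for noticeably more high-frequency gain; the change is capped.
gains_after_feedback = apply_user_feedback(gains, {4000: 5.0})
```

In this sketch the prescription gives the starting point and the feedback function handles the ongoing, user-driven adjustment the paragraph describes.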

Speech Recognition and Enhancement

AI techniques that improve speech recognition include:

- Deep Neural Networks: Deep neural networks, particularly convolutional and recurrent neural networks, can significantly improve speech recognition quality. Such models capture complex structure in speech patterns and help distinguish real speech from noise and recognition errors.

- Data Augmentation: This approach adds synthetic noisy data to the training dataset. A model trained under varied noise conditions becomes more robust and performs better in noisy real-world environments.

- Beamforming: Beamforming uses multiple microphones to capture audio arriving from different directions and to focus on the direction of the speech source. Because the captured signal usually contains several competing noise sources, this directional focus helps the speech stand out.

- Adaptive Filtering: Adaptive filters continuously adjust their coefficients to compensate for noise. They are used primarily in environments where the noise changes over time.

- Noise Reduction Algorithms: Algorithms such as spectral subtraction, Wiener filtering, and Kalman filtering help remove ambient noise from an audio signal, enhancing speech recognition. As a result of noise elimination, the system’s focus on speech elements becomes more precise.
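
The beamforming item in the list above can be illustrated with the simplest variant, delay-and-sum. The Python sketch below assumes the target's arrival delay at each microphone is already known and is a whole number of samples; a real device would have to estimate these delays and handle fractional values. The function name and the four-microphone setup are hypothetical.

```python
import numpy as np

def delay_and_sum(mic_signals, delays_samples):
    """Align each microphone signal by its known integer delay and average them.

    mic_signals    : array of shape (num_mics, num_samples)
    delays_samples : non-negative arrival delay of the target at each mic, in samples
    """
    mic_signals = np.asarray(mic_signals, dtype=float)
    num_mics, num_samples = mic_signals.shape
    output = np.zeros(num_samples)
    for signal, delay in zip(mic_signals, delays_samples):
        # Advance the signal by its delay so the target lines up across mics.
        aligned = np.zeros(num_samples)
        aligned[:num_samples - delay] = signal[delay:]
        output += aligned
    # The aligned target adds coherently; independent noise partly averages out.
    return output / num_mics

# Synthetic demonstration with four microphones and independent noise at each one.
rng = np.random.default_rng(1)
num_samples = 4000
target = np.sin(2 * np.pi * 300 * np.arange(num_samples) / 16000)
delays = [0, 3, 6, 9]
mics = np.stack([
    np.concatenate([np.zeros(d), target])[:num_samples] + 0.5 * rng.normal(size=num_samples)
    for d in delays
])
enhanced = delay_and_sum(mics, delays)
```

With independent noise at each microphone, averaging four aligned channels should cut the noise power to roughly a quarter while leaving the target unchanged, which is the directional gain that beamforming relies on.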

Integration with Other Technologies

AI-powered hearing aids can be paired with smartphones and other devices via Bluetooth, smartphone apps, and the Internet of Things (IoT). By allowing direct audio streaming from devices such as phones, tablets, and TVs, Bluetooth enhances the listening experience for calls, music, and movies. Mobile apps give users control over their hearing aids, offering features such as program selection, volume control, battery monitoring, and remote access to audiologists’ support. Additionally, IoT connectivity enables smart home equipment to communicate with hearing aids, for example by relaying alarms to the wearer, which improves safety and convenience. These integrations collectively enhance user control, real-time sound adjustments, and overall accessibility.

Future possibilities of connected and smart hearing aids:

- Augmented Reality (AR) Integration: Combining hearing aids with AR for enhanced spatial awareness and contextual audio information.

- Customized Sound Profiles: Ability to create and adjust personalized sound profiles based on individual preferences and hearing needs.

- Adaptive Soundscapes: Personalized sound environments that adjust automatically based on the user’s location and activity, such as adjusting for quiet or noisy settings.

- Remote Diagnostics and Support: Enhanced capabilities for remote troubleshooting, updates, and adjustments by audiologists without in-person visits.

Future Trends and Innovations

Future hearing aids will feature AI-driven adaptive sound, real-time translation, health monitoring, seamless connectivity, personalized profiles, and enhanced privacy, thanks to advancements in neuromorphic computing and the integration of wearable technology.

- Neuromorphic Computing Techniques: Neuromorphic chips enable hearing aids to process sound more efficiently and adaptively in real time, distinguishing between speech and background noise with greater accuracy. This technology enhances hearing experiences by enabling devices to dynamically adjust settings in response to changing acoustic conditions. Additionally, neuromorphic computing offers a higher level of personalization, learning from user interactions and preferences to create more intuitive and effective hearing solutions for diverse listening environments.

- 5G Integration: With 5G’s high-speed data transfer and low latency, hearing aids can receive real-time audio streams from multiple devices, including smartphones, TVs, and public address systems. This makes smooth switching and clear, seamless communication across different devices and services possible. Additionally, 5G enables innovative features such as remote audiology consultations and diagnostics, which facilitate wireless data transmission from hearing aids for professional assessment and timely adjustments.

- Quantum-Resistant Algorithms: Hearing aids could adopt quantum-resistant algorithms to protect user data from potential threats posed by quantum computers. Unlike traditional encryption methods that quantum computers may be able to break, these algorithms are designed to keep private data, including health indicators and music preferences, protected against such emerging risks.

- Electrocochleography: Electrocochleography (ECochG) is a diagnostic technique that measures the electrical potentials in the inner ear (cochlea) and auditory nerve in response to sound. Integrating ECochG sensors into hearing aids would represent a significant advancement, enabling real-time monitoring of cochlear function. This innovation would provide critical data on the auditory system’s performance, allowing the precise tuning of hearing aids to the user’s hearing profile and thereby enhancing sound quality and speech comprehension.

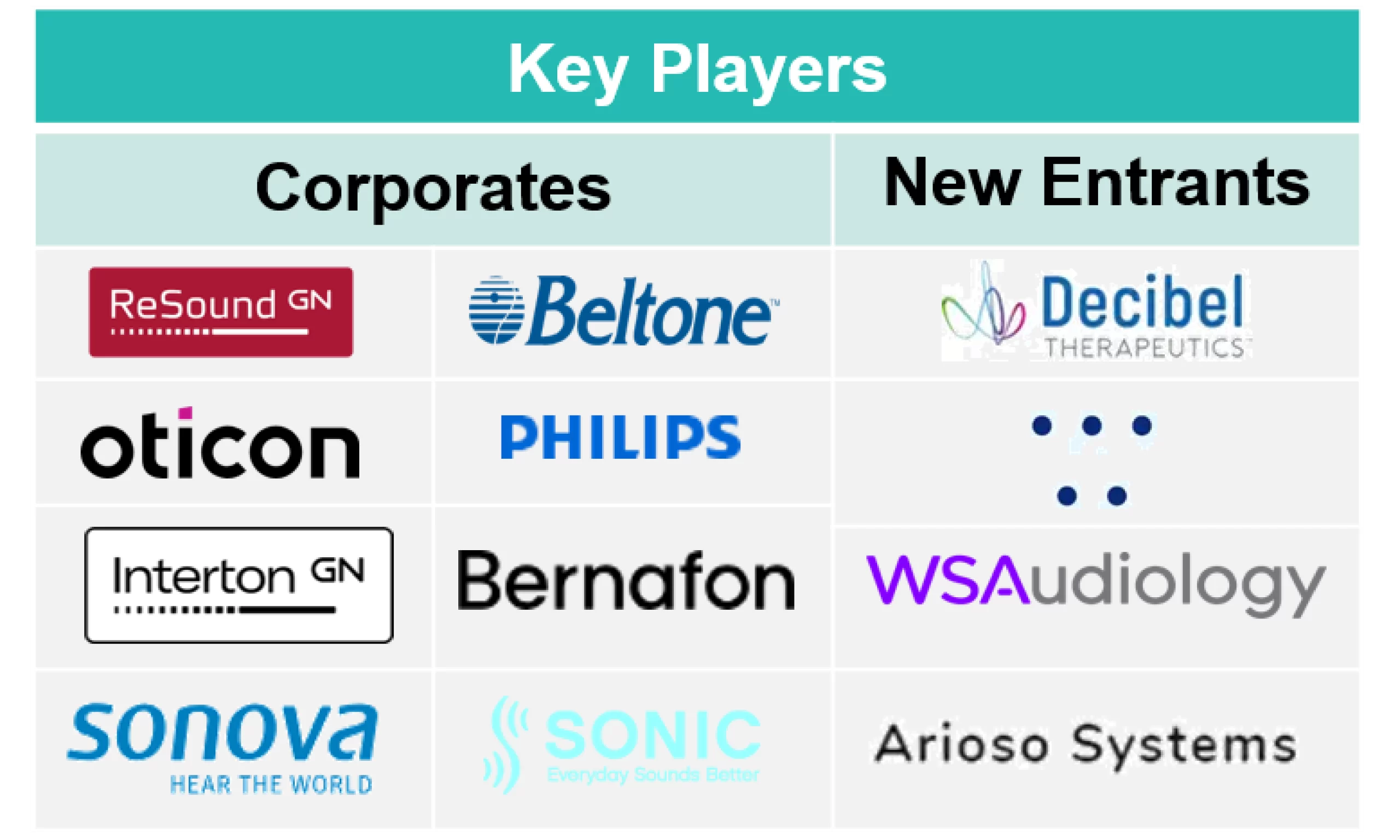

Market Leaders and New Entrants

ReSound has made notable advancements in hearing aid technology, enhancing the user experience through innovative features and digital upgrades. Key improvements include advanced wireless technology for seamless connectivity with smartphones and other devices, enabling direct audio streaming and control via the ReSound Smart 3D app. The LiNX Quattro platform offers superior sound quality with a full frequency range and enhanced clarity in challenging listening environments. Additionally, ReSound hearing aids feature health-monitoring sensors that track user activity and provide insights into overall well-being. These developments underscore ReSound’s commitment to delivering versatile, adaptive, high-quality hearing solutions.

WS Audiology has led the way in the development of hearing aid technology, focusing on enhancing user experience and auditory wellness. One of its accomplishments is advanced digital signal processing, which improves sound quality and reduces background noise to create a more natural listening experience. The hearing aids’ seamless Bluetooth connectivity enables users to stream music and adjust settings through dedicated apps. Machine learning algorithms adapt to the user’s environment and preferences, delivering a personalized hearing solution tailored to individual needs. Additionally, its miniaturization efforts, exemplified by the Signia Insio IX, have produced smaller, discreet devices that maintain high performance and battery life.

Conclusion

AI has strong potential to shape the features, design, and user experience of hearing aids. It makes possible real-time processing, adaptability to diverse acoustic environments, and more precise, natural-sounding output. Machine learning enables self-adjustment: the device learns a user’s preferences and automatically adjusts its settings for optimal hearing in a given environment. AI thereby improves speech recognition and noise reduction in ways that raise speech understanding and quality of life for people with hearing impairments.

Additionally, AI enables connections with other smart devices, supporting more advanced health monitoring and language translation capabilities. By constantly listening to, interpreting, and adjusting to auditory signals, AI-enabled hearing aids become significantly more refined and helpful, substantially improving quality of life for their users.
