Artificial Intelligence (AI) has become an essential part of everyday life. Advances in multimodal AI and large language models (LLMs) bring accessibility and convenience to applications such as chatbots, coding assistants, and self-driving cars. However, because these systems rely on vast datasets and complex learning models, it is often difficult, even for the people who build and deploy them, to detect patterns in their behavior or predict how they will behave in the future. Mishandling AI risks also leads to multiple privacy and security concerns.

This lack of transparency and security has led to the development of AI TRiSM (Artificial Intelligence Trust, Risk, and Security Management). This article explores AI TRiSM, its significance in a rapidly evolving world, and the prominent open research challenges that still need to be overcome.

What is AI TRiSM?

AI TRiSM is a framework for building reliable and secure AI systems. It serves as a base for AI model governance, transparency, fairness, efficacy, privacy, data protection, trustworthiness, security, reliability, and regulatory compliance.

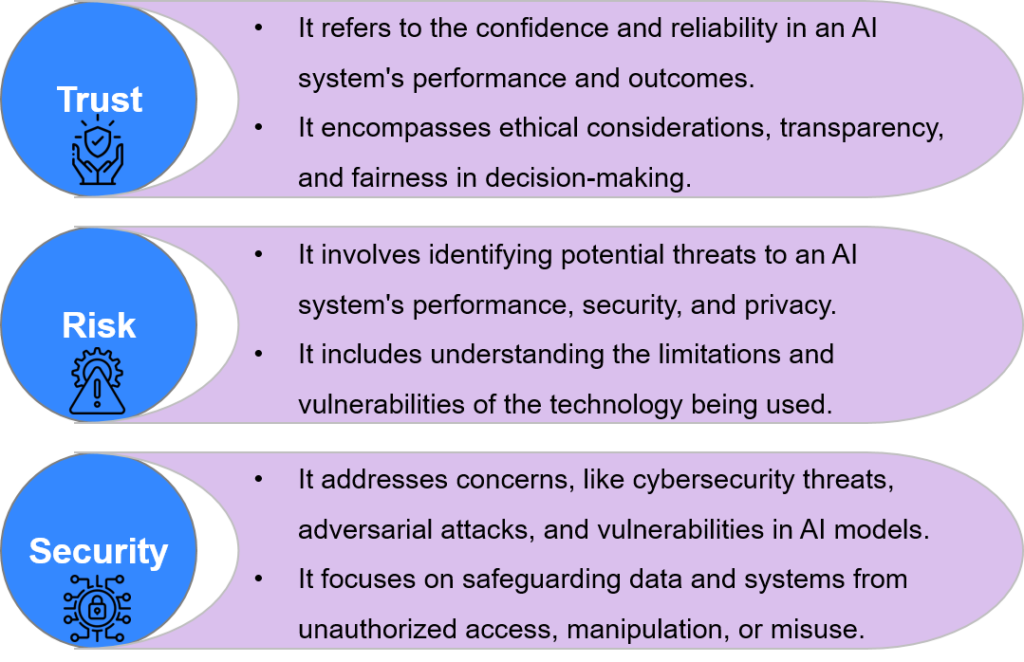

Figure 1: Individual features of Trust, Risk, and Security Management

The framework aims to put appropriate safeguards, dependability, and governance in place to prevent inappropriate AI use, while enhancing service delivery, improving efficiency, and optimizing resource utilization through intelligent automation and data-driven insights.

It also helps organizations and businesses boost trust in AI systems, streamline service management processes, automate repetitive tasks, mitigate potential biases, predict risks before they emerge, protect sensitive data, and offer customers proactive solutions that improve satisfaction and loyalty.

AI TRiSM Framework

AI TRiSM combines five pillars, or principles, that lay the foundation of AI Trust, Risk, and Security Management. The five pillars are:

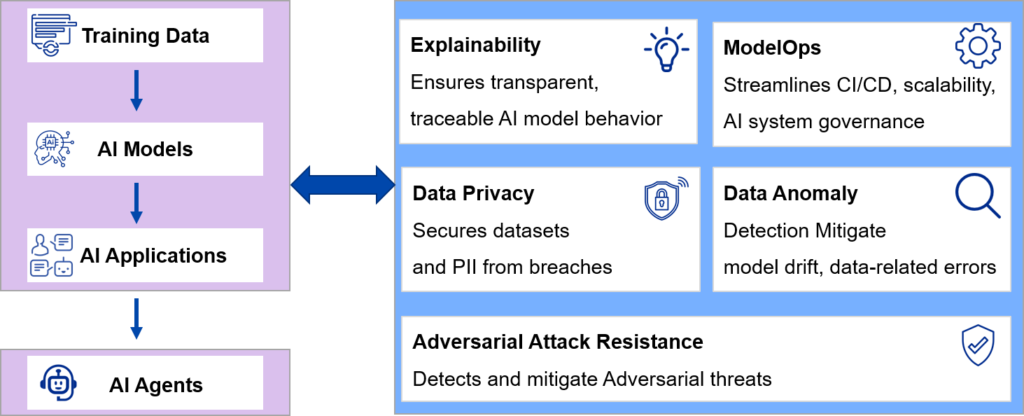

Figure 2: AI TRiSM pillars to deliver managed trust, risk, and security for AI systems

- Explainability: It refers to developing transparent and human-understandable AI systems, models, or algorithms that clearly describe how an AI model processes information to make decisions. It allows users to interpret reasoning for its results, ensuring trustworthy, fair, and ethical outcomes.

- ModelOps: It includes processes and tools for developing, deploying, managing, monitoring, refining, testing, and updating an AI model throughout its end-to-end lifecycle. It ensures the AI model maintains quality and continuously optimizes its performance by handling issues such as model drift, bias, and unintended consequences.

- Data Privacy: Data used by AI systems must be secured to ensure the accuracy, privacy, integrity, and confidentiality of sensitive information throughout its lifecycle. Protecting data against unauthorized access, modification, or breach involves measures such as encryption, access controls, and data anonymization, which also support governance and compliance with regulations such as the General Data Protection Regulation (GDPR).

- Data Anomaly Detection: Flawed or shifting AI data can lead to abnormal, inaccurate, inconsistent, or potentially risky outcomes, such as biased results. This pillar therefore covers monitoring data for drift and errors. AI TRiSM aims to improve visibility into AI data, detect data anomalies, and maintain AI system integrity by identifying irregularities in real time, mitigating training-data errors, and actively addressing potential threats.

- Adversarial Attack Resistance: It protects AI systems from adversarial or malicious attacks that use data to disrupt machine learning algorithms and alter AI functionality. Defenses include adversarial training, defensive distillation, model ensembles, and feature squeezing, which guard against attempts to trick the model, alter data, steal model information, or poison training data.
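To make the Data Anomaly Detection pillar more concrete, here is a minimal sketch of one simple way to flag irregular values in incoming data: compare each live value against the mean and standard deviation of a trusted reference window (a z-score check). The function name `flag_anomalies`, the threshold of 3 standard deviations, and the sample numbers are all assumptions made for illustration; production drift monitoring typically uses richer statistical tests.

```python
from statistics import mean, pstdev

def flag_anomalies(reference, live, threshold=3.0):
    """Return indices of live values that lie more than `threshold`
    standard deviations away from the mean of the reference data."""
    mu = mean(reference)
    sigma = pstdev(reference)
    if sigma == 0:
        raise ValueError("reference data has zero variance")
    return [i for i, x in enumerate(live) if abs(x - mu) / sigma > threshold]

# Hypothetical feature values: a trusted training window and new live inputs.
reference = [10, 11, 9, 10, 12, 10, 9, 11, 10, 10]
live = [10, 11, 25, 9]

flagged = flag_anomalies(reference, live)
print(flagged)  # indices of live values that look irregular
```

Only the value 25 is far enough from the reference distribution to be flagged here; the other live values fall within normal variation.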

Prominent Techniques to Implement AI TRiSM

Several techniques are used within AI TRiSM to improve an AI system’s transparency, fairness, accountability, and security. Explainable AI (XAI) techniques, including LIME and SHAP, make a model’s decision-making process understandable to humans. To maintain transparency and accountability, ethical AI frameworks and fairness checks help ensure that models are developed and deployed without bias.

Below are the key algorithms and techniques implemented in AI TRiSM.

1. Local Interpretable Model-Agnostic Explanations (LIME)

Functionality: LIME is a technique that explains the individual predictions of any machine learning classifier by approximating it locally with an interpretable model.

Process:

- Sampling: LIME perturbs the input data around the instance being explained and observes the changes in the model’s predictions.

- Local Model: It then trains a simple, interpretable model (like linear regression) on this perturbed data, weighting samples by their proximity to the instance, to approximate the complex model’s behavior around that specific instance.

- Interpretation: The local model’s weights provide insights, such as which features influence the original model’s prediction.
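The LIME process described above can be sketched with NumPy alone. This is a simplified illustration of the idea, not the `lime` package: the `black_box` model, the Gaussian perturbation, the exponential proximity kernel, and all parameter values are assumptions chosen for the example.

```python
import numpy as np

def black_box(X):
    """Stand-in for a complex model: returns one probability per row.
    Feature 2 is deliberately ignored by this hypothetical model."""
    z = 3.0 * X[:, 0] - 2.0 * X[:, 1]
    return 1.0 / (1.0 + np.exp(-z))

def lime_explain(predict, instance, n_samples=500, scale=0.5,
                 kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate around `instance`
    and return one weight per feature."""
    rng = np.random.default_rng(seed)

    # Sampling: perturb the instance and query the black-box model.
    X = instance + rng.normal(0.0, scale, size=(n_samples, instance.size))
    y = predict(X)

    # Proximity weights: samples closer to the instance count more.
    dist = np.linalg.norm(X - instance, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)

    # Local model: weighted least squares on intercept + centered features.
    A = np.hstack([np.ones((n_samples, 1)), X - instance])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)

    # Interpretation: the slopes are the local feature influences.
    return coef[1:]

weights = lime_explain(black_box, np.array([0.2, 0.1, 0.5]))
print(weights)
```

In this setup the surrogate should assign a positive weight to feature 0, a negative weight to feature 1, and a weight close to zero to feature 2, mirroring how the stand-in model actually uses its inputs near the chosen instance.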

2. SHapley Additive exPlanations (SHAP)

Functionality: SHAP provides a unified measure of feature importance for any machine learning model.

Process:

- Shapley Values: Derived from cooperative game theory, they represent the average marginal contribution of a feature across all possible combinations (coalitions) of the other features

- Feature Attribution: SHAP values can be used to attribute an instance’s prediction to its features, ensuring a fair and consistent explanation

- Visualization: SHAP offers various plots (e.g., force plots and summary plots) visually representing feature contributions
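To illustrate what a Shapley value is, the sketch below computes exact values by brute force over every feature coalition. It is not how the `shap` library works internally (that library relies on faster approximations and model-specific algorithms), and it assumes that a feature "absent" from a coalition is replaced by a baseline value. The toy model and the names `shapley_values`, `toy_model`, and `baseline` are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, instance, baseline):
    """Exact Shapley values; cost grows exponentially with feature count."""
    n = len(instance)

    def value(coalition):
        # Features in the coalition take the instance's value,
        # all others fall back to the baseline.
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def toy_model(x):
    # One additive term plus one interaction between features 1 and 2.
    return 2 * x[0] + x[1] * x[2]

instance = [1, 2, 3]
baseline = [0, 0, 0]
phi = shapley_values(toy_model, instance, baseline)
print(phi)
```

The attributions sum to the difference between the model’s output for the instance and for the baseline, and the interaction term is split evenly between the two features that jointly produce it. These two properties are what the text above refers to as a fair and consistent explanation.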

3. Disparate Impact Analysis

Objective: Evaluate if decisions disproportionately affect certain demographic groups.

Technical Steps:

- Calculate Outcome Rates: Compute the rate of favorable outcomes for different groups (e.g., hiring rate for men vs. women)

- Compare Rates: Measure the ratio of these rates between groups

- Threshold: A common threshold for disparate impact is 0.8 (80%). Potential bias is indicated if the ratio is lower than this
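The three steps above amount to a short calculation. The sketch below uses made-up hiring outcomes (1 = hired, 0 = not hired); the group data and function names are hypothetical and serve only to show the arithmetic.

```python
def favorable_rate(outcomes):
    """Share of favorable outcomes (1s) in a list of 0/1 decisions."""
    return sum(outcomes) / len(outcomes)

def disparate_impact_ratio(protected, reference):
    """Ratio of the protected group's favorable rate to the reference group's."""
    return favorable_rate(protected) / favorable_rate(reference)

# Hypothetical hiring decisions for two groups of ten applicants each.
women = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]  # 3 of 10 hired
men = [1, 1, 0, 1, 0, 1, 0, 1, 0, 0]    # 5 of 10 hired

ratio = disparate_impact_ratio(women, men)
potential_bias = ratio < 0.8  # the 80% threshold described above

print(f"ratio={ratio:.2f}, potential bias: {potential_bias}")
```

With hiring rates of 30% and 50%, the ratio is 0.6, which falls below the 0.8 threshold and would therefore be flagged for further review.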

4. Counterfactual Fairness

Objective: Ensure outcomes do not change when sensitive attributes are altered

Technical Steps:

- Generate Counterfactuals: Create counterfactual scenarios by altering sensitive attributes (e.g., race, gender)

- Compare Outcomes: Check if the outcomes remain consistent in the counterfactual scenarios
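A simple way to sketch these two steps is an attribute-flip test: change only the sensitive attribute and check whether the decision changes. Note that this is a simplification; the formal definition of counterfactual fairness relies on a causal model in which features influenced by the sensitive attribute would change as well. The two scoring models, the applicant records, and the function name `counterfactual_flips` are hypothetical.

```python
def biased_model(applicant):
    """Hypothetical model that (improperly) adds a bonus based on gender."""
    bonus = 1.0 if applicant["gender"] == "male" else 0.0
    return 0.5 * applicant["experience"] + bonus >= 3.0

def fair_model(applicant):
    """Hypothetical model that ignores the sensitive attribute."""
    return 0.5 * applicant["experience"] >= 3.0

def counterfactual_flips(model, applicants, attribute, values):
    """Return applicants whose outcome changes when only `attribute` is altered."""
    flipped = []
    for applicant in applicants:
        # Generate counterfactuals: same record, different sensitive value.
        outcomes = {model({**applicant, attribute: v}) for v in values}
        # Compare outcomes: more than one distinct result means a flip.
        if len(outcomes) > 1:
            flipped.append(applicant)
    return flipped

applicants = [
    {"experience": 4, "gender": "female"},
    {"experience": 5, "gender": "male"},
    {"experience": 8, "gender": "female"},
]
genders = ["male", "female"]

biased_flips = counterfactual_flips(biased_model, applicants, "gender", genders)
fair_flips = counterfactual_flips(fair_model, applicants, "gender", genders)
print(len(biased_flips), len(fair_flips))
```

For the biased model, the two applicants near the decision boundary receive different outcomes depending on gender alone, while the fair model produces no flips.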

Open Challenges in AI TRiSM

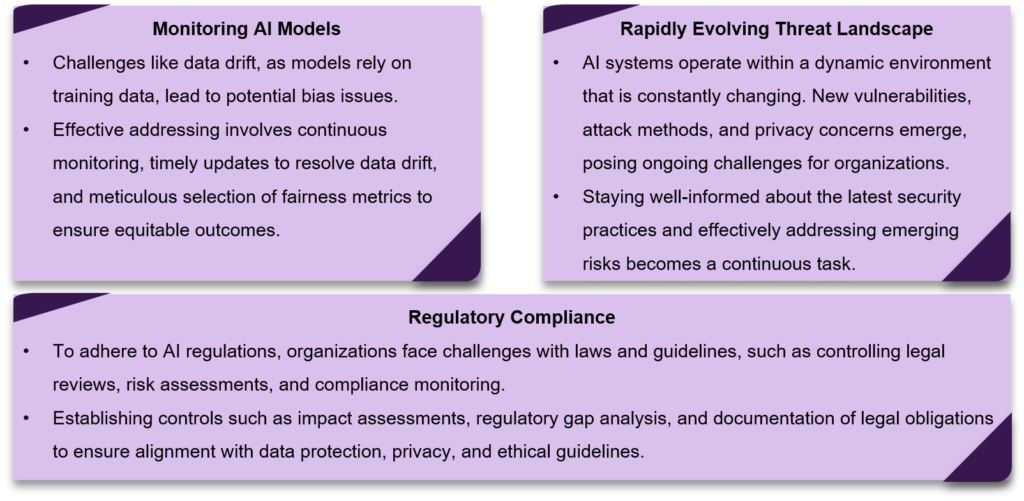

Despite the usefulness of the AI TRiSM framework, some prominent challenges stand in the way of its adoption, such as navigating the evolving threat landscape, complying with regulations, and monitoring AI models. Figure 3 describes the challenges in brief.

Figure 3: AI TRiSM Open Challenges

The AI TRiSM market is active, with companies such as IBM, Accenture, Wipro, and others collaborating to provide solutions, including platforms that integrate AI TRiSM for various applications.

Prominent Players

The AI TRiSM technology industry consists of many established, active companies contributing to the advancement of trust, risk, and security management solutions for AI.

Figure 4: AI TRiSM Prominent Key Players

Recent Activity

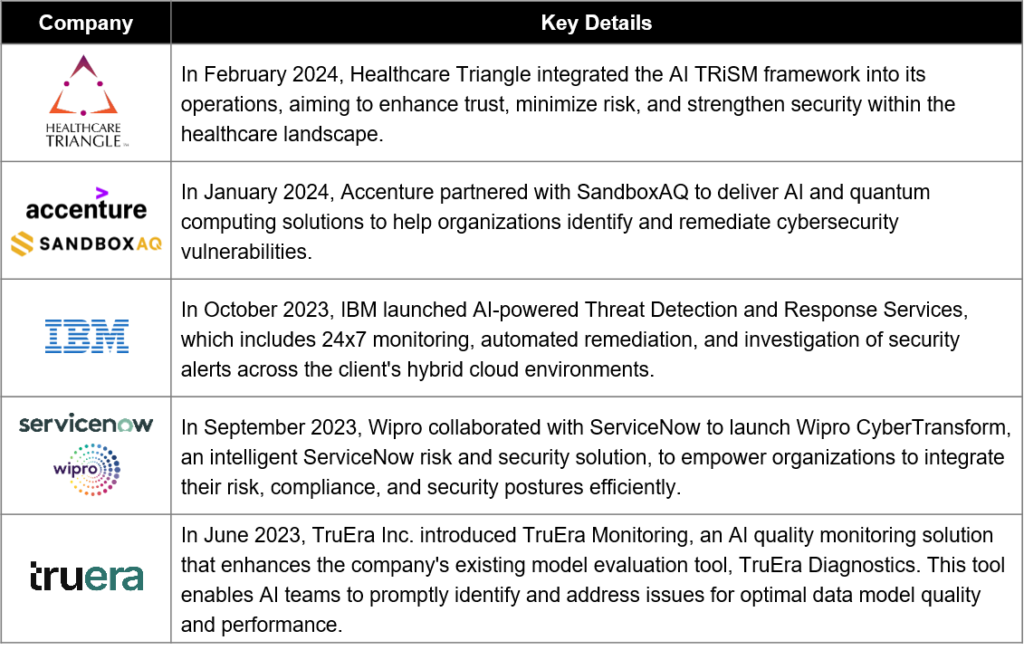

The AI TRiSM market is witnessing dynamic activity, with various companies forming partnerships, mergers, and collaborations. Some of the key recent activities are briefly described below.

Table 1: Recent Market Activity Related to AI TRiSM

Conclusion

As AI is integrated into more applications, AI TRiSM will become increasingly important for meeting requirements around data security, privacy, safety, transparency, and accountability, and for meeting rising expectations of AI systems overall. AI TRiSM also offers growing benefits to businesses in terms of reliability, cost reduction, stability, and safety.

The future of AI TRiSM, like that of multimodal AI, is bright, as it can help us achieve trust and reliability in AI, monitor risk, and prevent insecure and unreliable outcomes. The integration of other technologies, such as the Internet of Things (IoT), quantum computing, federated learning, and edge computing, will further increase the importance of AI TRiSM.